Abstract

This poster introduces Flotilla, a modular, model-agnostic Federated Learning (FL) framework. It supports synchronous client-selection and aggregation strategies, deploys and trains FL models on clusters of edge clients, and collects telemetry for advanced systems research.

Framework Architecture

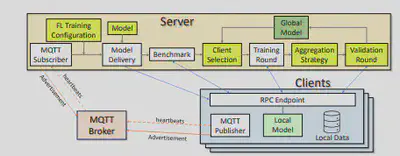

Figure 1. Framework architecture. Source: FedML framework, HiPC-SRS 2023.

Flotilla is modular and model-agnostic, so researchers can plug in different client-selection and aggregation strategies for FL. It runs on clusters of edge clients such as Raspberry Pis and integrates telemetry for experimentation and systems-level insight. The framework is implemented in Python and provides abstraction layers for server orchestration, model deployment, and synchronous FL rounds.
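To make the plug-in idea concrete, the following is a minimal sketch of one synchronous round in which the selection and aggregation strategies are passed in as ordinary functions. Every name here (`select_all`, `weighted_average`, `run_round`, the `train` callback) is hypothetical and is not Flotilla's actual API. The aggregation shown is a sample-weighted average in the style of FedAvg, chosen only for illustration; the source does not say which aggregators the framework ships with.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical types for illustration; not Flotilla's real interfaces.
Weights = List[float]
Update = Tuple[Weights, int]  # (client model weights, number of local samples)


def select_all(clients: Sequence[str], round_no: int) -> List[str]:
    """Selection strategy that picks every available client."""
    return list(clients)


def weighted_average(updates: List[Update]) -> Weights:
    """Aggregation strategy: sample-weighted average of client weights."""
    total = sum(n for _, n in updates)
    if total == 0:
        raise ValueError("clients reported no training samples")
    dim = len(updates[0][0])
    return [sum(w[i] * n for w, n in updates) / total for i in range(dim)]


def run_round(
    clients: Sequence[str],
    round_no: int,
    train: Callable[[str], Update],
    select: Callable[[Sequence[str], int], List[str]],
    aggregate: Callable[[List[Update]], Weights],
) -> Weights:
    """One synchronous round: select, train on every chosen client, aggregate."""
    chosen = select(clients, round_no)
    # Synchronous: the server waits for all selected clients before aggregating.
    updates = [train(client_id) for client_id in chosen]
    return aggregate(updates)
```

Because `select` and `aggregate` are plain parameters, swapping a strategy means passing a different function and leaving `run_round` untouched. The model itself stays opaque to the round logic, which is one way to read "model-agnostic".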

Experimental Insights

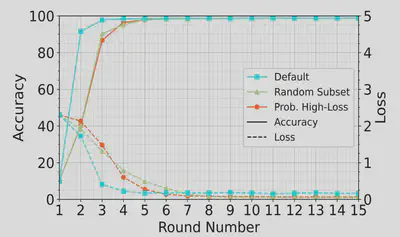

We evaluated different client-selection strategies on a lab testbed of homogeneous Raspberry Pi devices connected over a Gigabit LAN. In an IID data setting, where local models are inherently aligned, the Default strategy converged fastest because it received the most client updates per round.

Random Selection (RS) and Partial Heterogeneous Loss (PHL) performed similarly, because client validation losses were nearly identical across rounds. With no stragglers, training durations remained consistent across clients. PHL, however, showed periodic increases in training time because it incurs validation overhead on every alternate round.
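The three strategies compared above can be sketched as small selection functions. This is an illustration under stated assumptions, not the framework's code. It assumes Default selects every client, which is consistent with it receiving the most updates per round. Since PHL is not defined here, `select_by_loss` is a generic loss-ranked stand-in rather than the actual PHL algorithm. All function names are hypothetical.

```python
import random
from typing import Dict, List, Sequence


def select_default(clients: Sequence[str]) -> List[str]:
    """Assumed Default behaviour: every client participates in each round."""
    return list(clients)


def select_random(clients: Sequence[str], k: int, rng: random.Random) -> List[str]:
    """Random Selection: sample k distinct clients uniformly at random."""
    return rng.sample(list(clients), min(k, len(clients)))


def select_by_loss(val_loss: Dict[str, float], k: int) -> List[str]:
    """Loss-based stand-in (not the actual PHL): k clients with highest loss.

    Ties are broken by client id so the result is deterministic.
    """
    ranked = sorted(val_loss, key=lambda c: (-val_loss[c], c))
    return ranked[:k]
```

When validation losses are nearly identical across clients, as in the IID setting described above, a loss-based ranking carries little signal and the chosen subset is close to arbitrary. That is one plausible reading of why the random and loss-based strategies behaved alike.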

The model converged in 100 FL rounds, reaching 98.4% accuracy, with an average round time of 375 seconds.

Figure 2. Results. Source: FedML framework, HiPC-SRS 2023.

Press

- “Towards a Modular Federated Learning Framework on Edge Devices” at HiPC 2023 Student Research Symposium